Robot Learning from Offline Data

In recent years, image recognition and language processing based on deep learning have had a significant impact on many industries. Many of the AI models that are reshaping industry owe their success to learning general-purpose features from big data. In image recognition, for example, a deep network trained on a large dataset extracts generic features that can then be adapted to a wide variety of tasks.

Deep reinforcement learning, in contrast, is a framework that uses deep learning not only for recognition but also for decision making. It has attracted increasing attention through results such as AlphaGo, which defeated top Go players. However, reinforcement learning learns by repeated interaction with the environment, which is considered difficult to apply in real-world domains where trial and error is dangerous, such as automated driving and medicine.

There is therefore strong interest in developing reinforcement learning techniques that, like image recognition, can learn general-purpose features from a large dataset collected in advance.

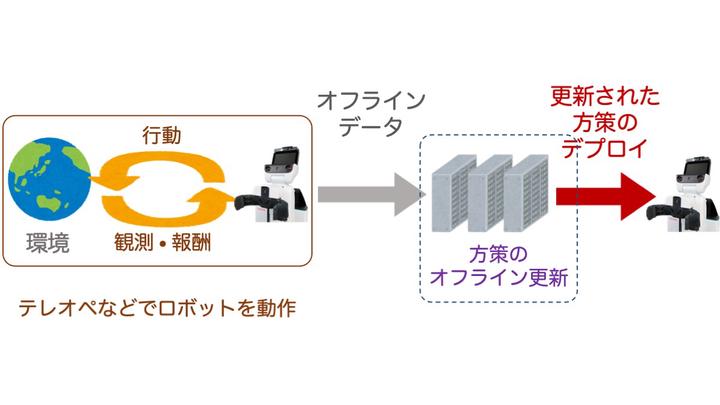

Offline reinforcement learning is a reinforcement learning framework that learns a decision-making model using only pre-collected datasets (offline data), without interacting with the environment during training. Learning without access to the environment is highly desirable for many real-world problems where trial and error is not allowed, and it makes it possible to train on large existing datasets. However, the mismatch between the offline data used for training and the situations the learned policy encounters once deployed in the environment has a significant impact on the accuracy of the model, and this is a major challenge.
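As a rough illustration of learning from offline data only, the sketch below runs tabular Q-learning over a fixed list of logged transitions and never queries an environment. The three-state chain, the `dataset` contents, the function name `offline_q_learning`, and the hyperparameters are all hypothetical choices made for this example; it is not the method of any particular paper.

```python
def offline_q_learning(transitions, n_states, n_actions,
                       gamma=0.9, lr=0.5, epochs=200):
    """Fit a tabular Q-function from a fixed list of logged transitions.

    Each transition is (state, action, reward, next_state, done).
    No environment is queried: only the logged data is replayed.
    """
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(epochs):
        for s, a, r, s_next, done in transitions:
            # Bootstrapped target built from logged data only.
            target = r if done else r + gamma * max(q[s_next])
            q[s][a] += lr * (target - q[s][a])
    return q


# Hypothetical log from a 3-state chain:
# action 1 moves right, action 0 stays; reaching state 2 pays 1.0.
dataset = [
    (0, 1, 0.0, 1, False),
    (0, 0, 0.0, 0, False),
    (1, 1, 1.0, 2, True),
    (1, 0, 0.0, 1, False),
]

q_table = offline_q_learning(dataset, n_states=3, n_actions=2)

# Greedy policy: pick the highest-valued action in each state.
greedy_policy = [row.index(max(row)) for row in q_table]
```

State-action pairs that never appear in `dataset` keep their initial value of zero, so the learned policy has no information about them. This is a small-scale picture of the mismatch between offline data and deployment described above.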

Recent work at TRAIL includes “Deployment-Efficient Reinforcement Learning via Model-Based Offline Optimization” (accepted for publication at ICLR 2021). This work points out that the number of times a policy is deployed to the real world is an important metric for reinforcement learning in real-world applications, and proposes the concept of deployment efficiency. The proposed method, BREMEN, is a model-based method that initializes the policy by behavior cloning and introduces a mechanism to update its parameters conservatively, thereby achieving high deployment efficiency. For more details, please refer to the paper.
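The two ingredients named above, initializing the policy by behavior cloning and then updating it conservatively, can be pictured with a toy tabular sketch. This is not BREMEN's implementation: the function names, the smoothing constant, the KL threshold `max_kl`, and the hand-written `advantages` are assumptions made for illustration, and a simple backtracking step stands in for whatever conservative update the paper actually uses. In a model-based method the advantage estimates would come from a learned dynamics model rather than being supplied by hand.

```python
import math


def behavior_cloning(pairs, n_states, n_actions, smoothing=1.0):
    """Estimate a tabular policy from logged (state, action) pairs by counting."""
    counts = [[smoothing] * n_actions for _ in range(n_states)]
    for s, a in pairs:
        counts[s][a] += 1.0
    return [[c / sum(row) for c in row] for row in counts]


def kl(p, q):
    """KL divergence KL(p || q) between two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def conservative_update(policy, advantages, max_kl=0.01, step=1.0):
    """Reweight each state's action probabilities by exp(step * advantage),
    halving the step until KL(new || old) is at most max_kl."""
    new_policy = []
    for probs, adv in zip(policy, advantages):
        s = step
        while True:
            weights = [p * math.exp(s * a) for p, a in zip(probs, adv)]
            total = sum(weights)
            candidate = [w / total for w in weights]
            if kl(candidate, probs) <= max_kl:
                break
            s *= 0.5  # back off: stay closer to the current policy
        new_policy.append(candidate)
    return new_policy


# Hypothetical log for a single state: action 1 chosen twice, action 0 once.
logged_pairs = [(0, 1), (0, 1), (0, 0)]
bc_policy = behavior_cloning(logged_pairs, n_states=1, n_actions=2)

# Hypothetical advantage estimates favoring action 1.
advantages = [[0.0, 1.0]]
updated_policy = conservative_update(bc_policy, advantages)
```

The update shifts probability toward the action with the higher advantage, but only as far as the KL limit allows, so the new policy stays close to the behavior-cloned starting point that the offline data supports.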